Table of contents

- The flagship of this time...

- 2008.11.20. WatchingFilmAboutFreeSoftware

- 2008.10.12. Visual map of the lab ceiling for localization method

- 2008.10.10. The Robotics Club

- 2008.10.03. Localization using MonteCarlo Method

- 2008.09.20. Localization experiments stats

- 2008.09.20. Small noise using Montecarlo localization

- 2008.09.16. Big noise using Montecarlo localization

- 2008.08.29. Robot Pioneer environments

- 2008.06.25. Films projection simulator

- 2008.06.15. Grant holder by ME Collaboration Project: Computer Vision

- 2008.06.07. [Bonus track] Aibos teleoperated with the Wiimote. The Final Cup

- 2008.05.25. VFF local navigation using the real Pioneer

- 2008.05.20. GPP global navigation using the real Pioneer

- 2008.04.30. Pioneer robot avoiding walls

- 2008.04.28. Global GPP simulations: Player/Stage VS. OpenGL

- 2008.03.17. Fluids simulation

- 2008.03.13. Testing CUDA library

- 2008.03.01. Modeling raytracing

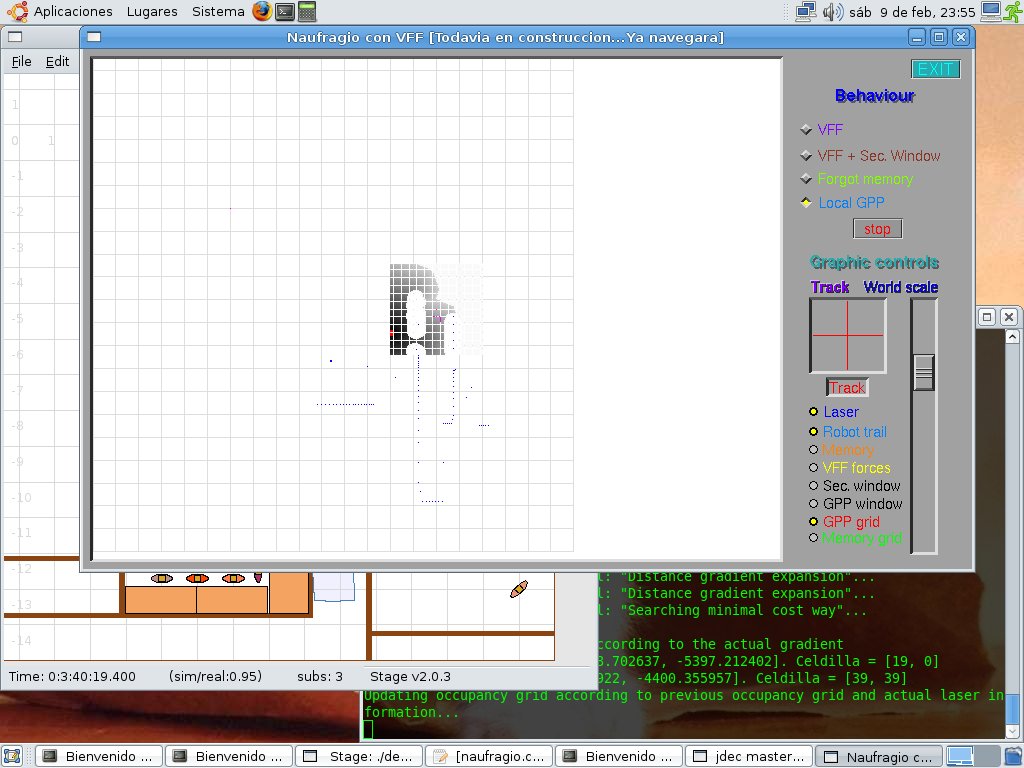

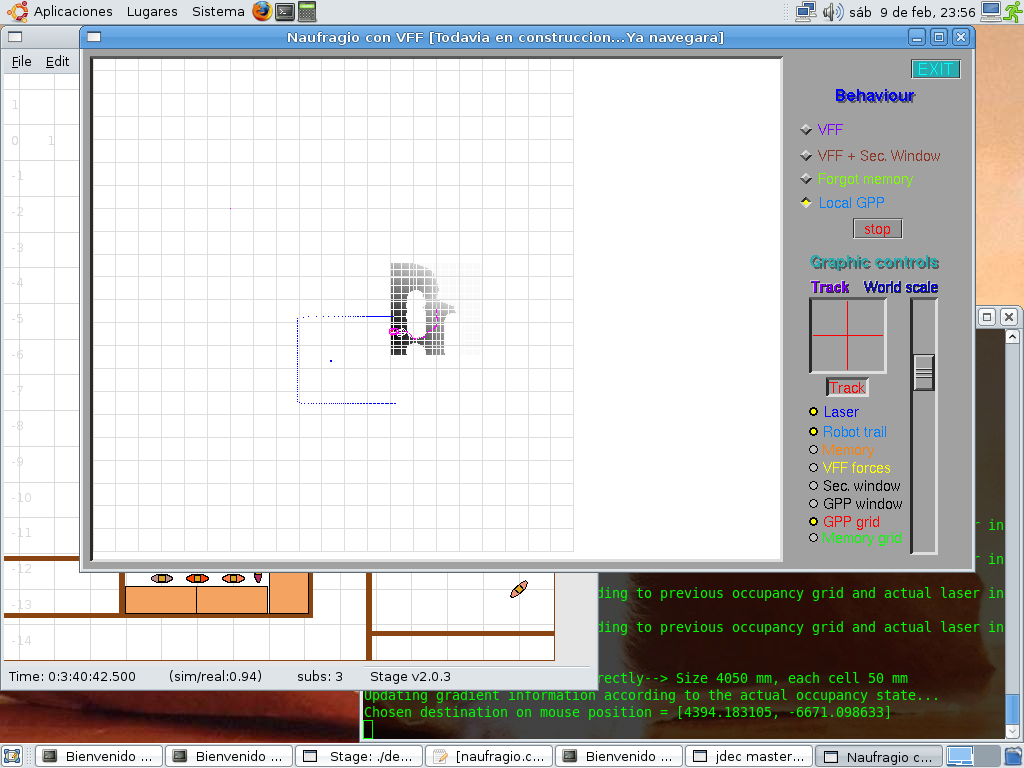

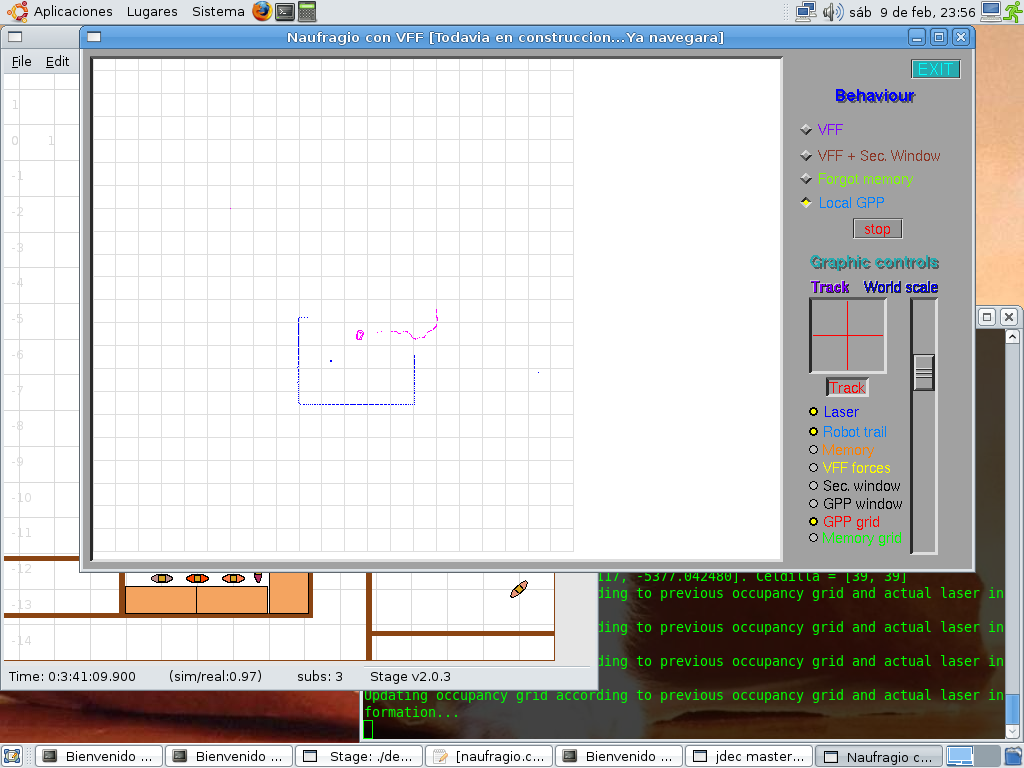

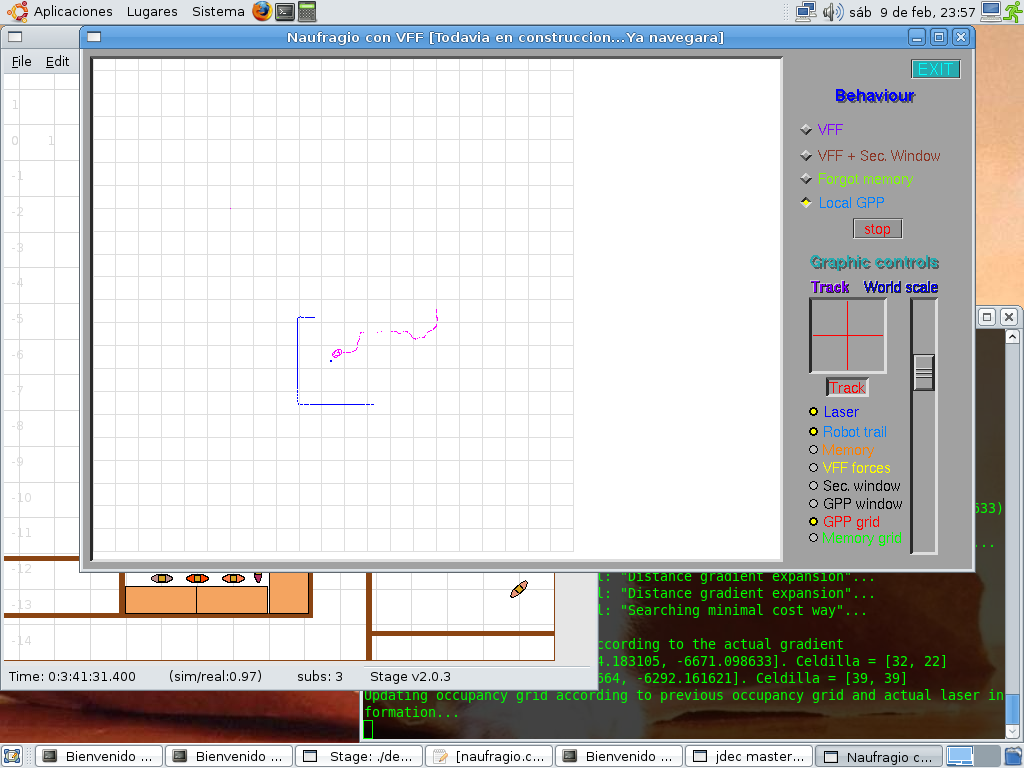

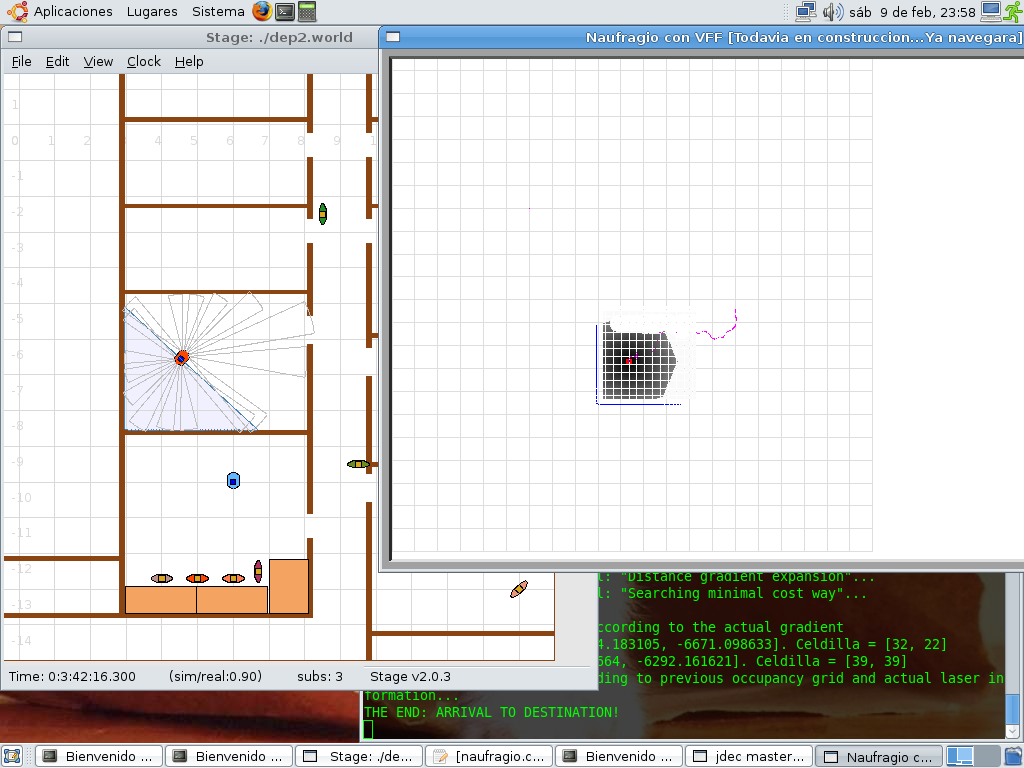

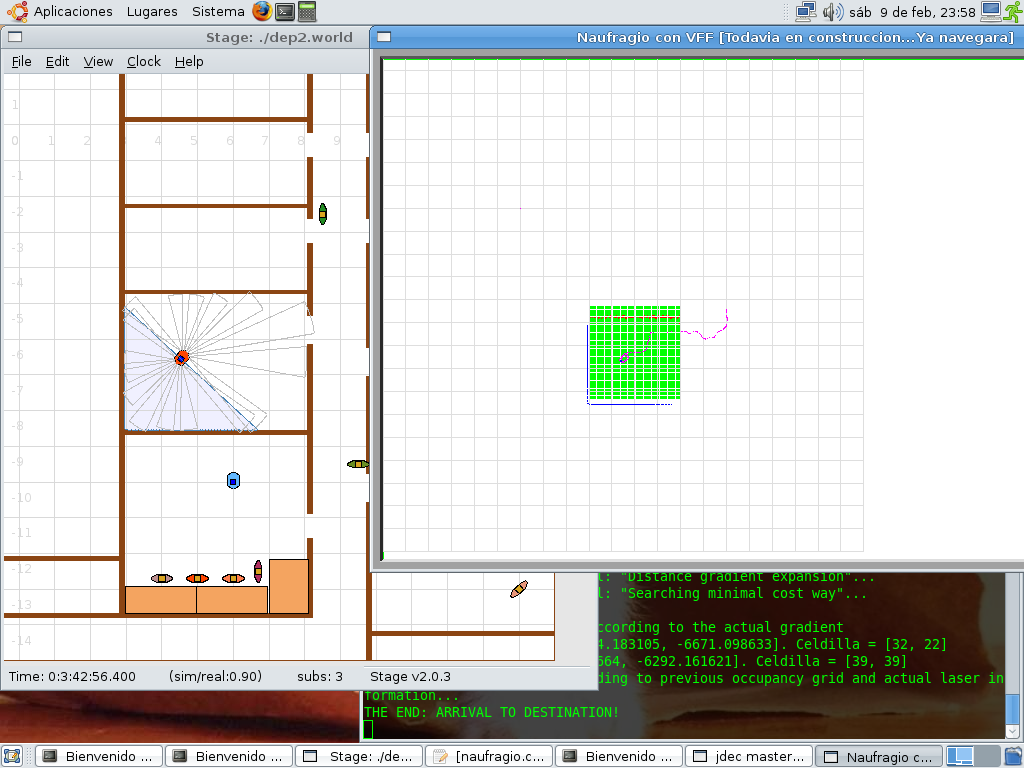

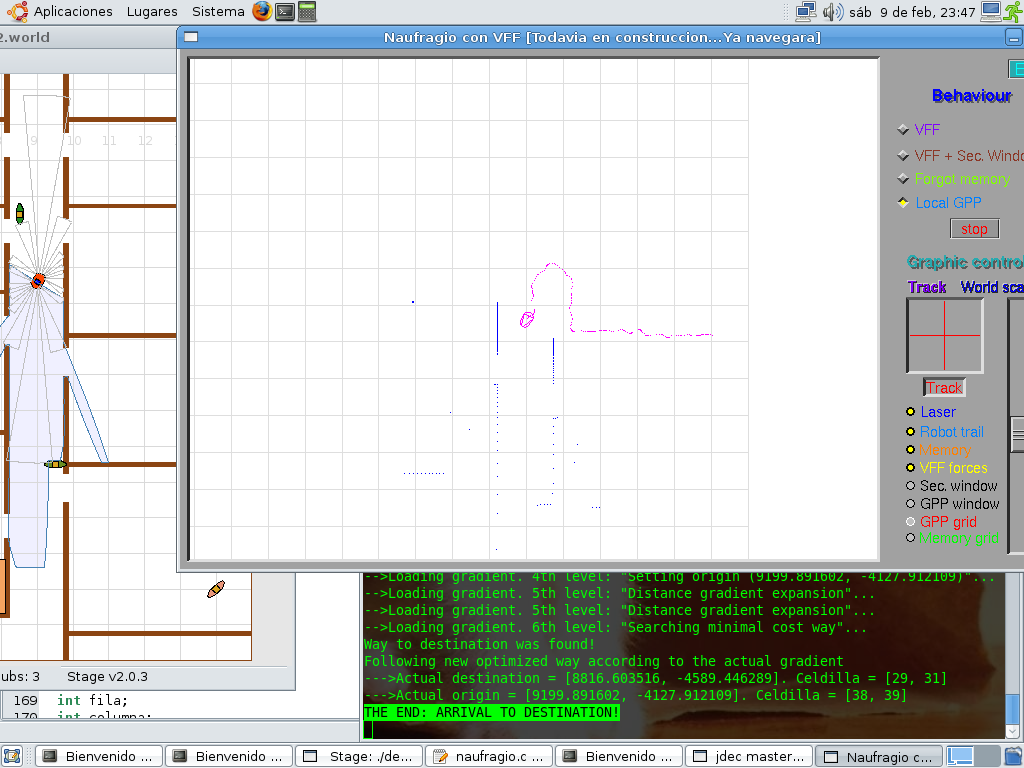

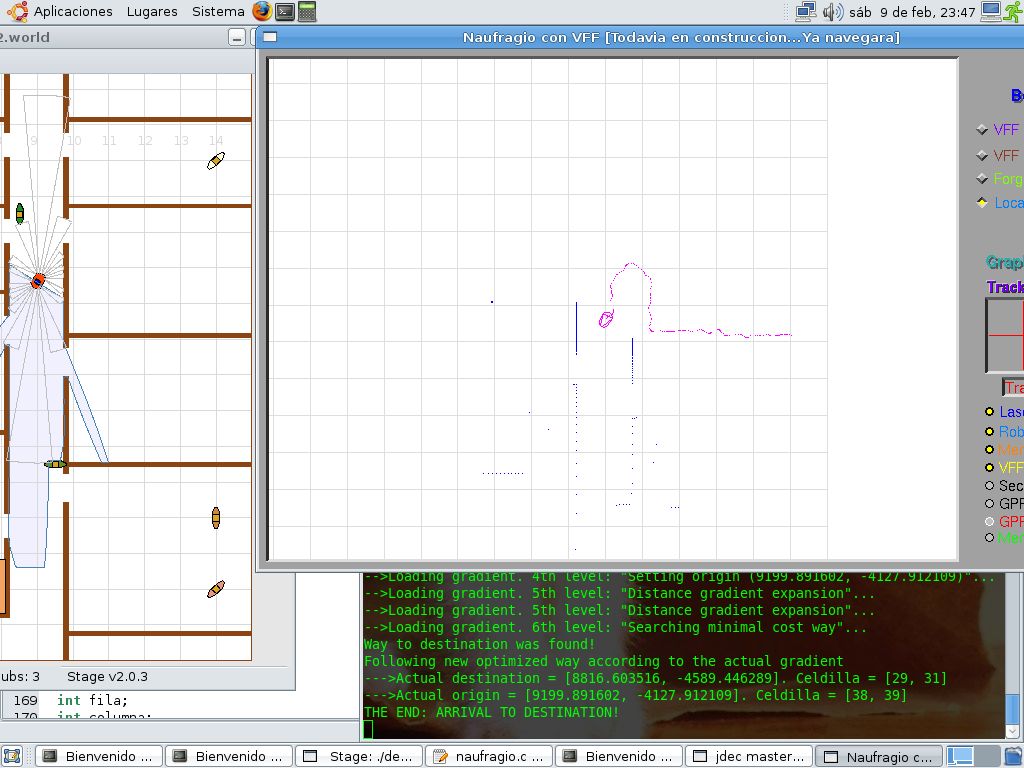

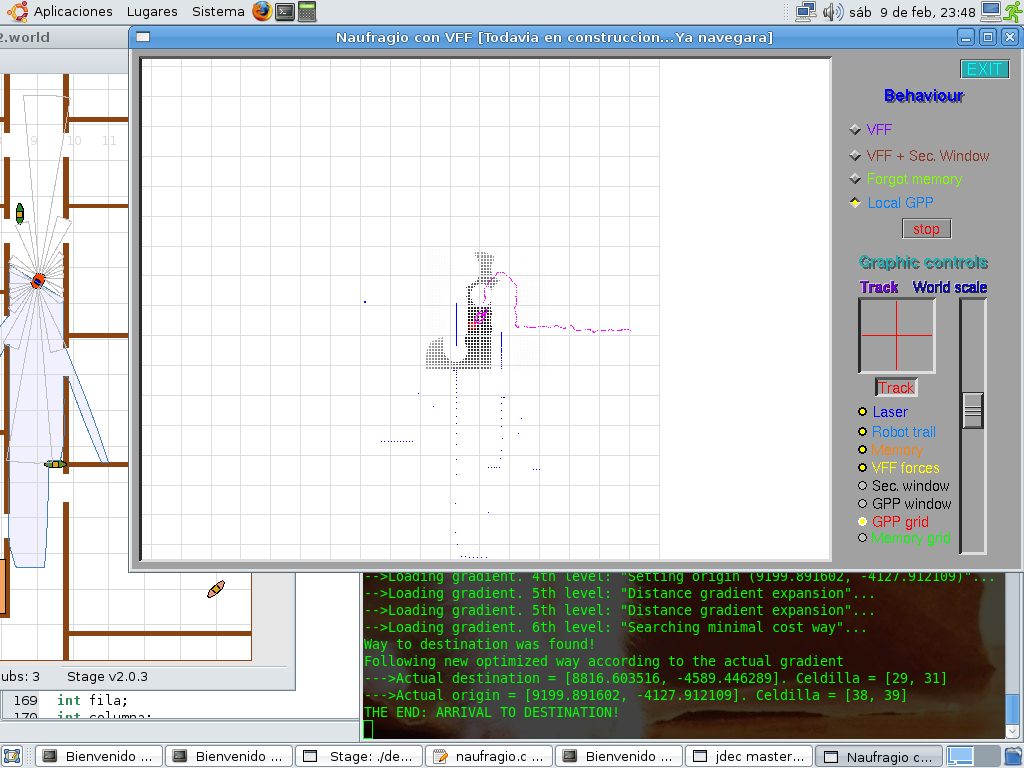

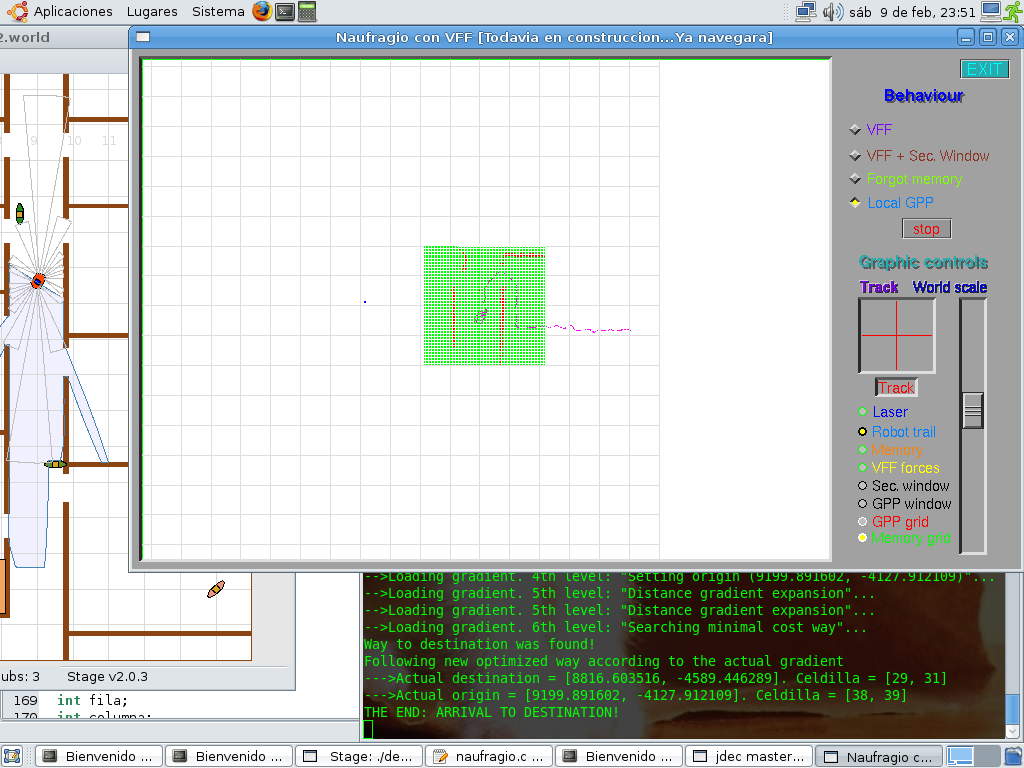

- 2008.02.09. GPP behavior with obstacles

- 2008.02.09. GPP behavior on doors

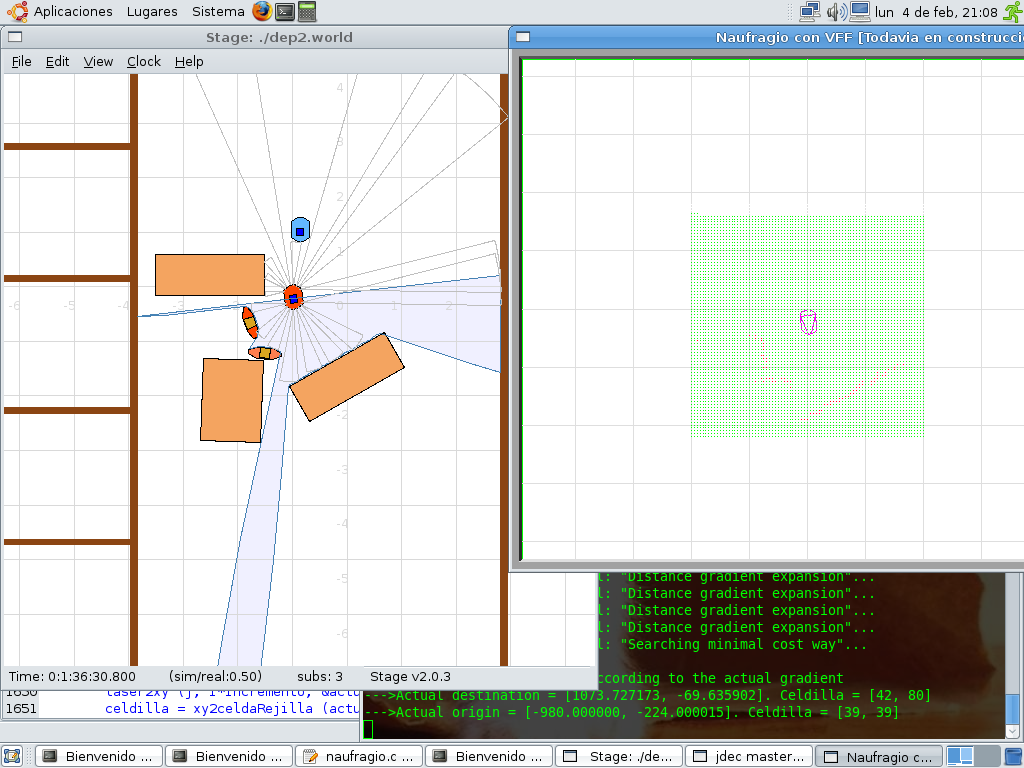

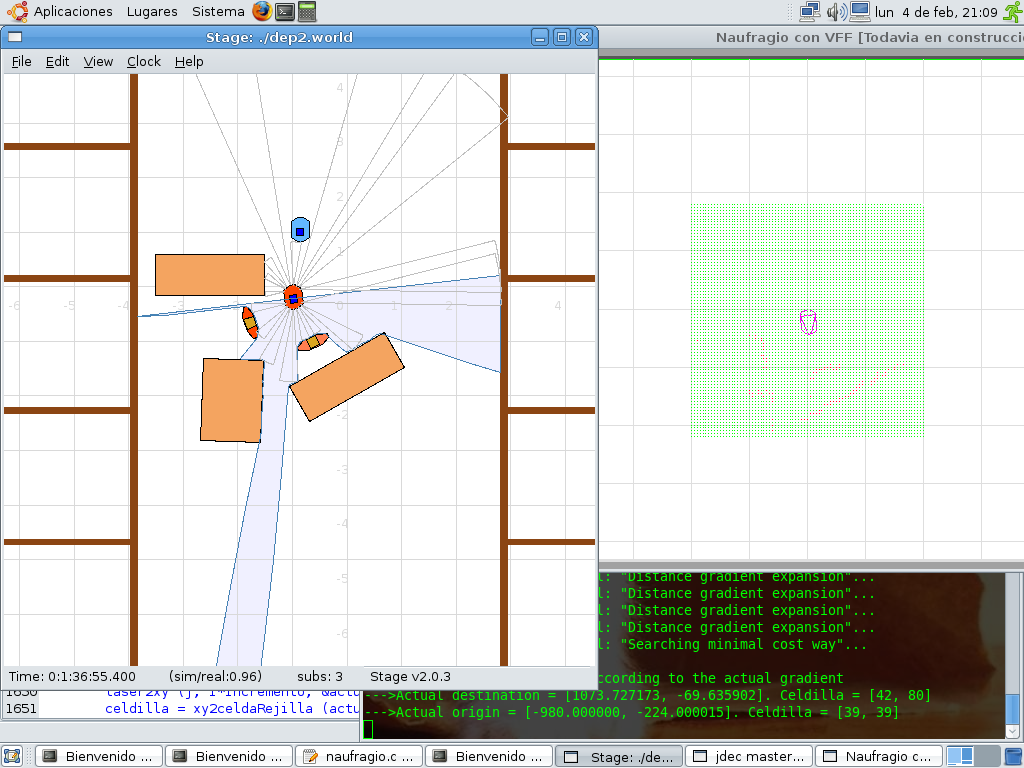

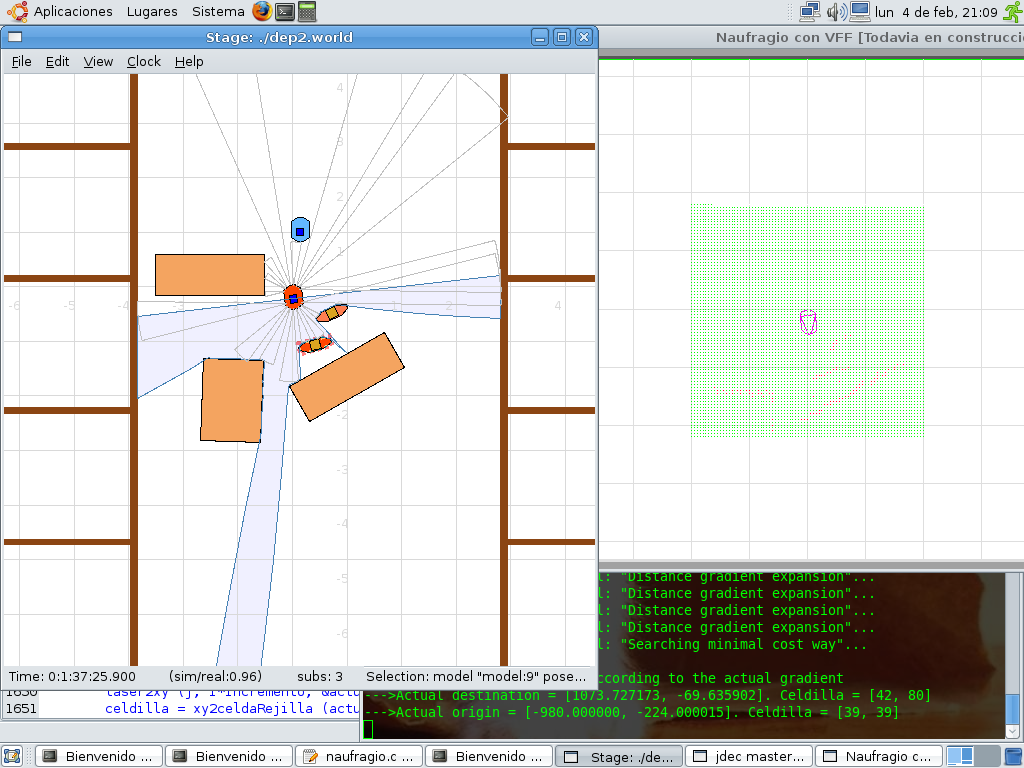

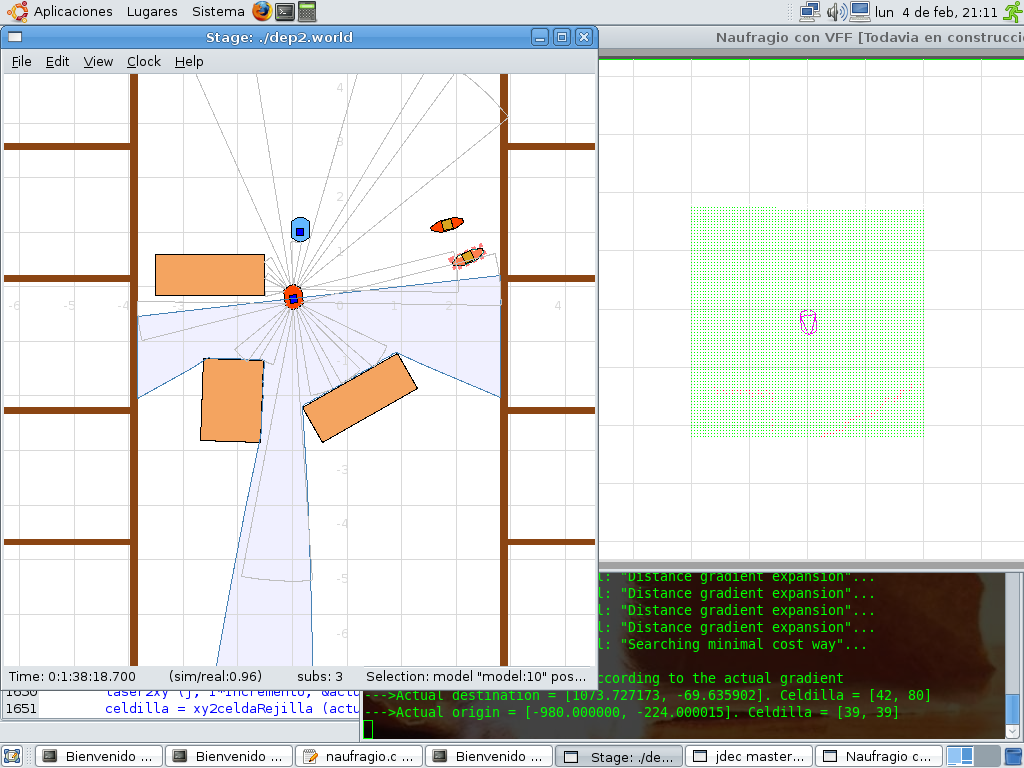

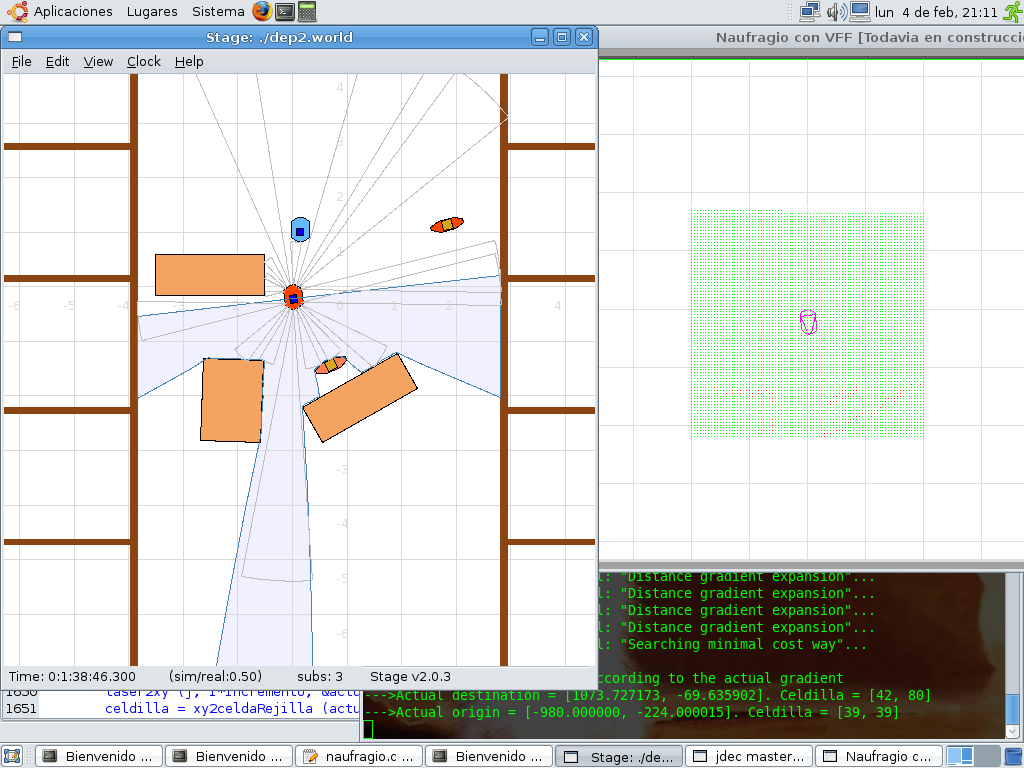

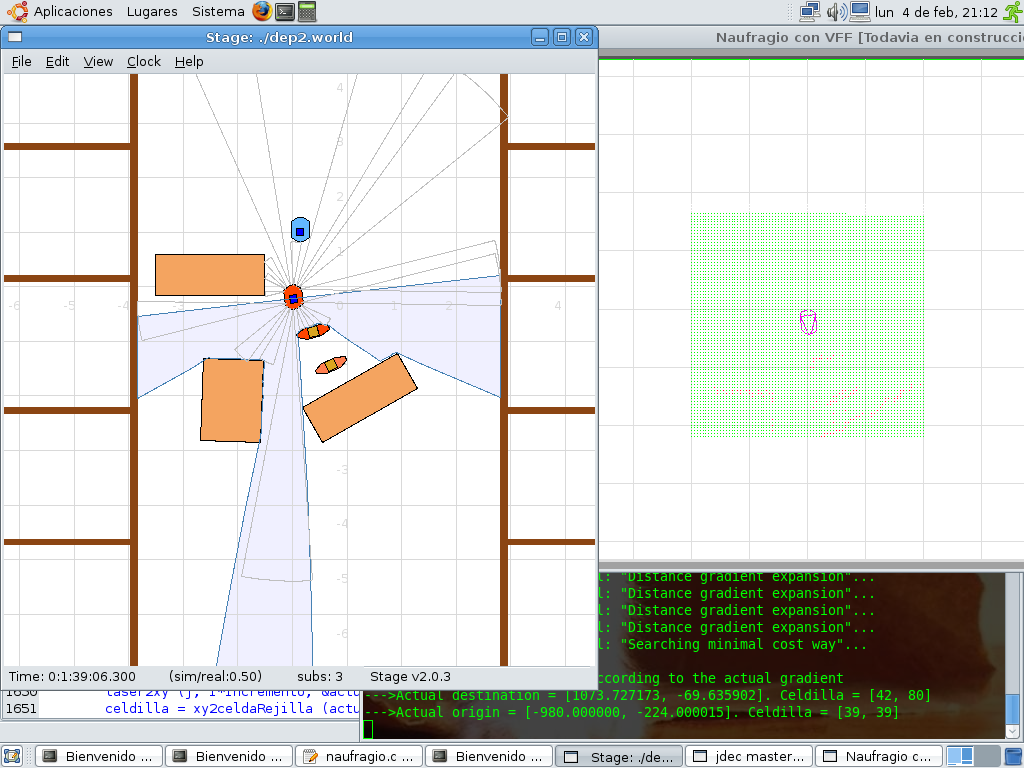

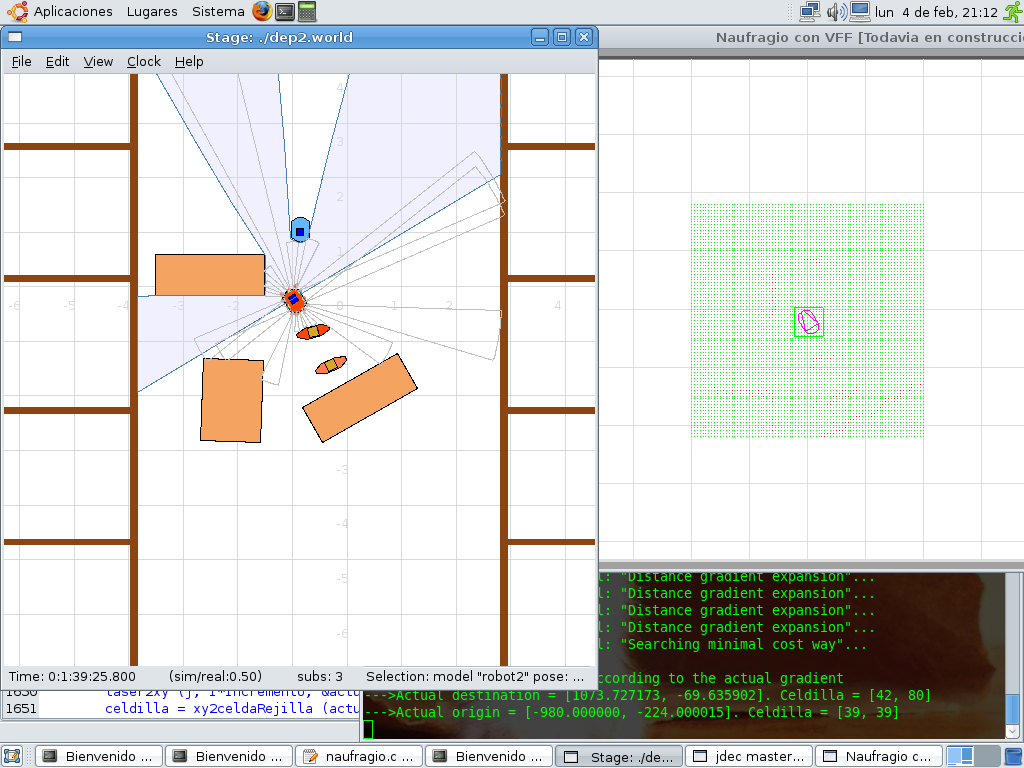

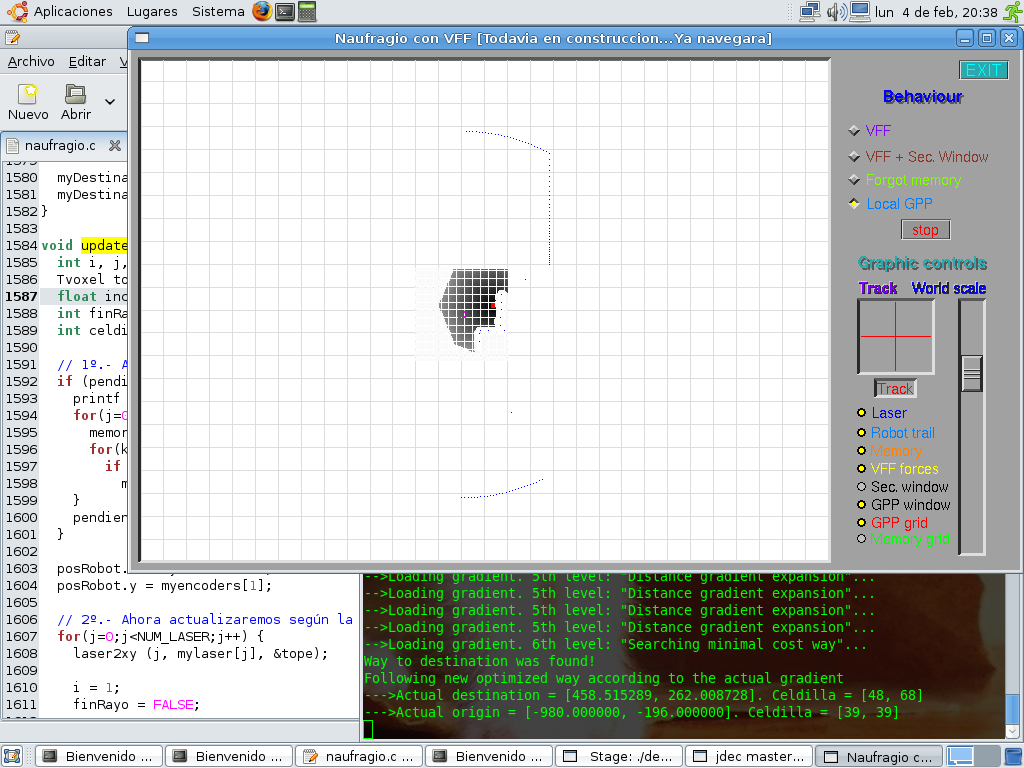

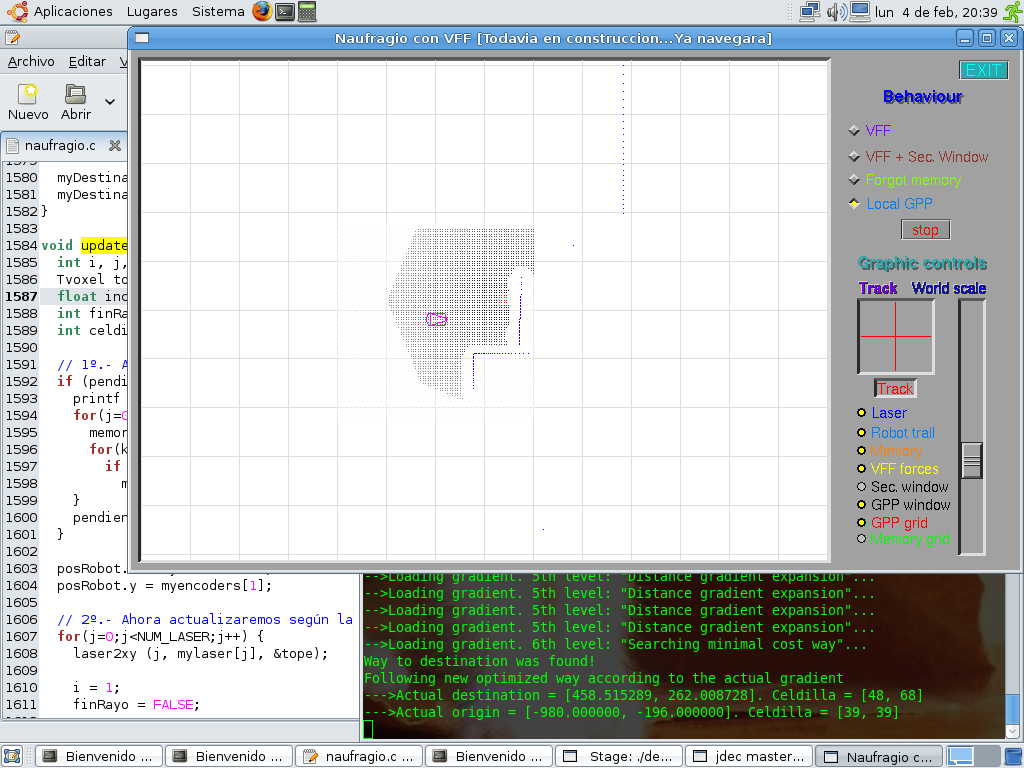

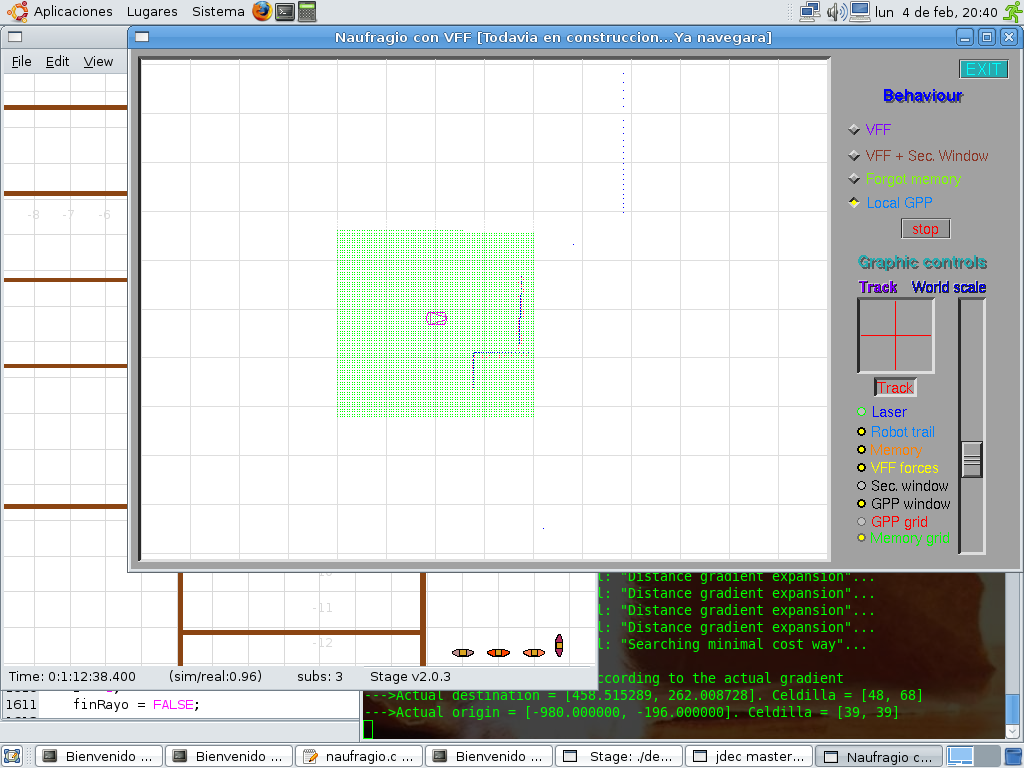

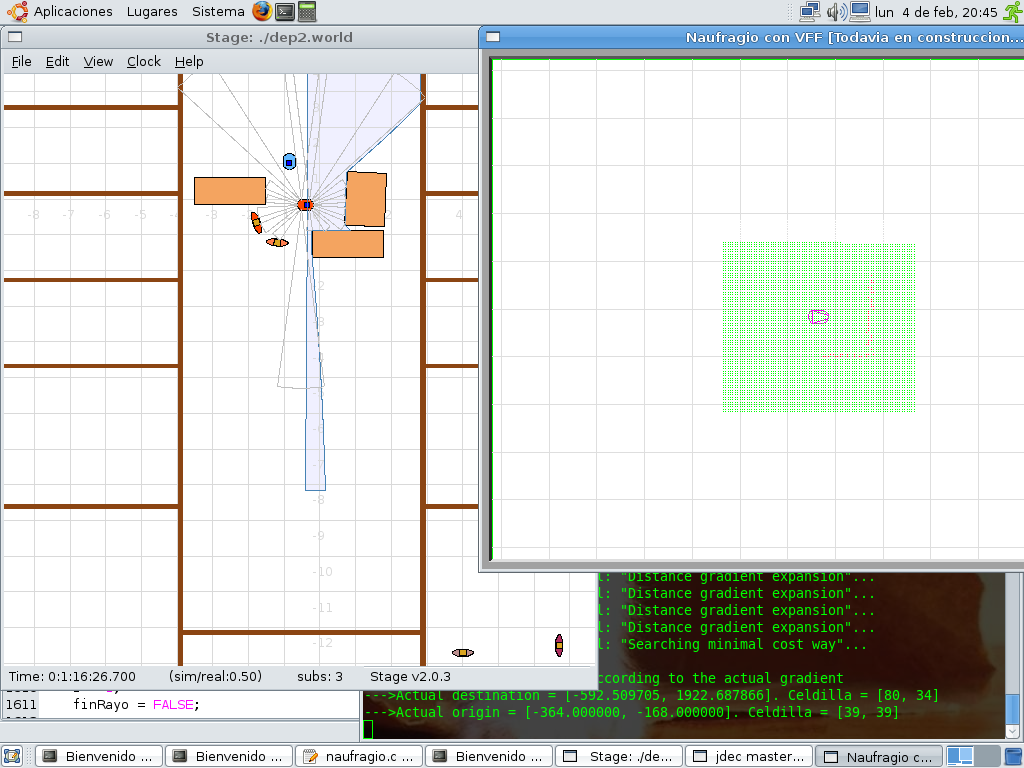

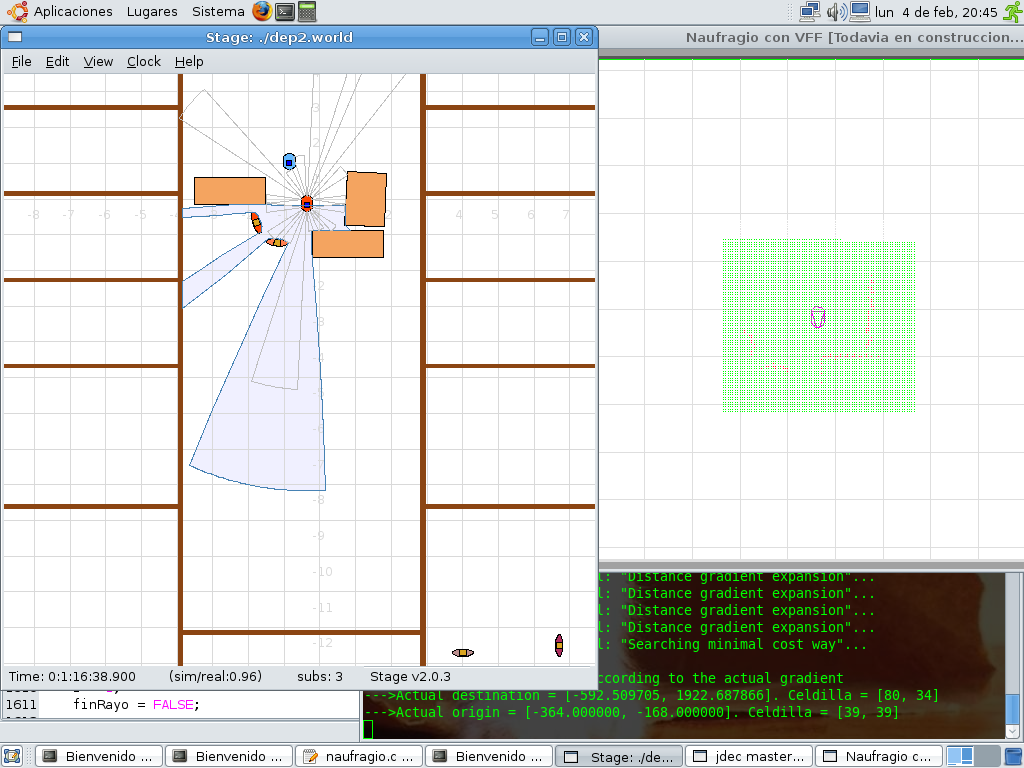

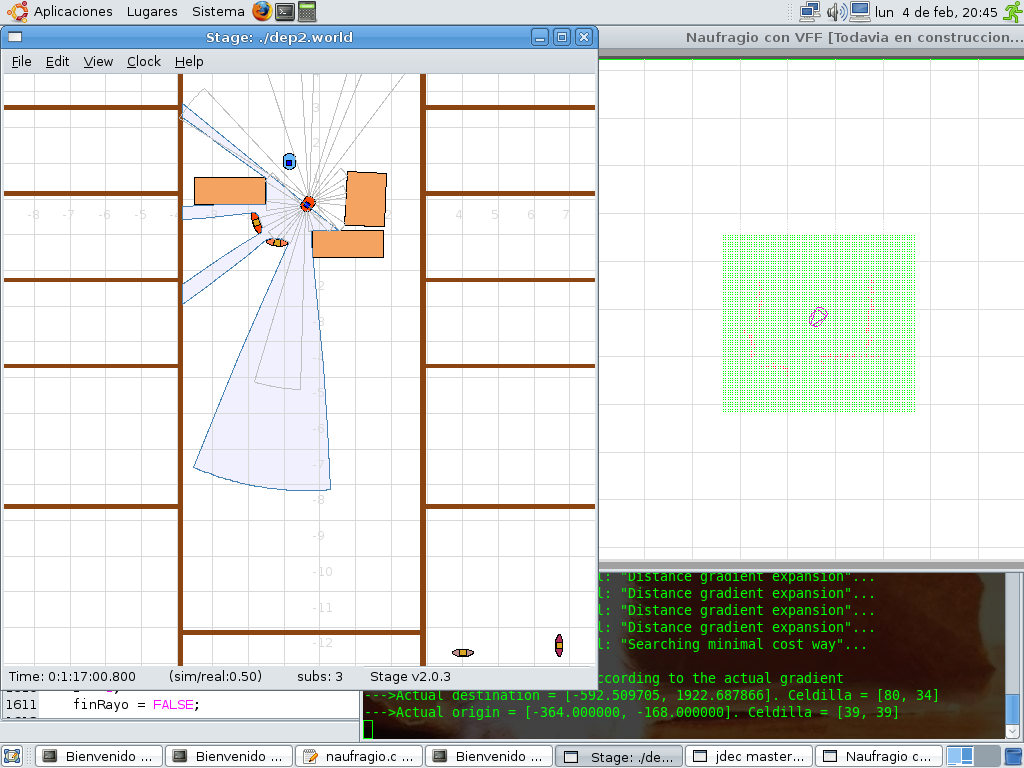

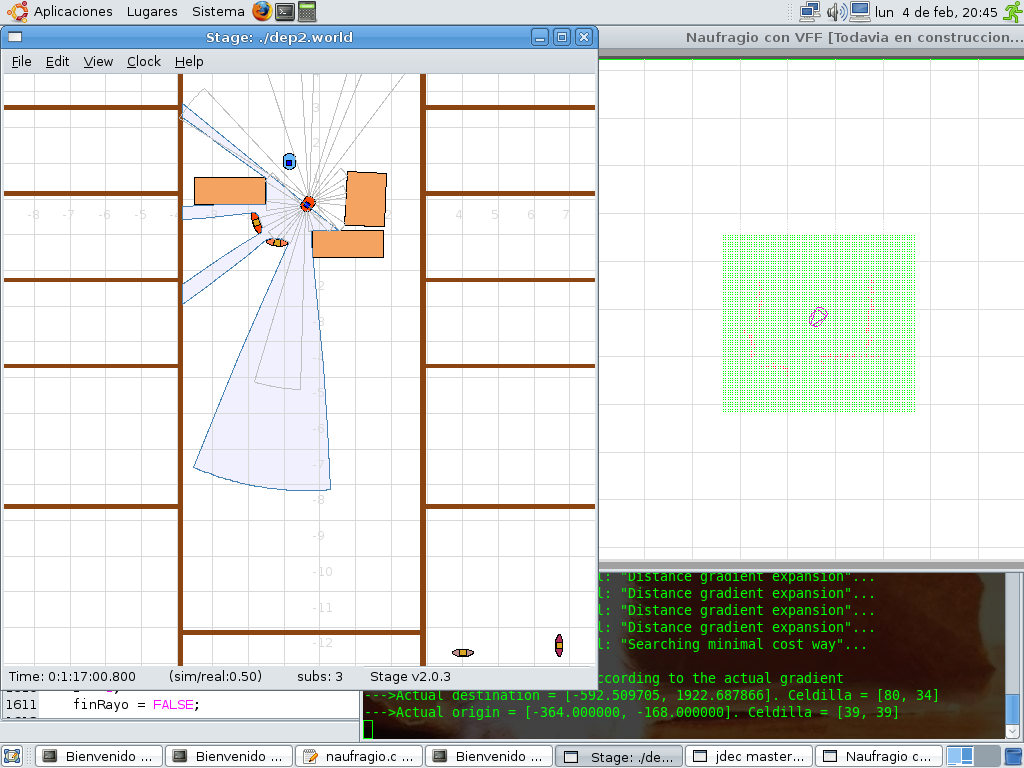

- 2008.02.04. Occupancy grid behavior

- 2008.02.04. GPP global navigation with obstacles

- 2008.01.31. GPP with doors on Player/Stage

- 2008.01.30. Local GPP

- 2008.01.06. Physics simulation

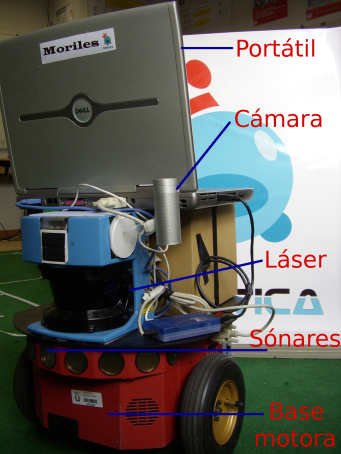

The flagship of this time...

2008.11.20. WatchingFilmAboutFreeSoftware

2008.10.12. Visual map of the lab ceiling for localization method

2008.10.10. The Robotics Club

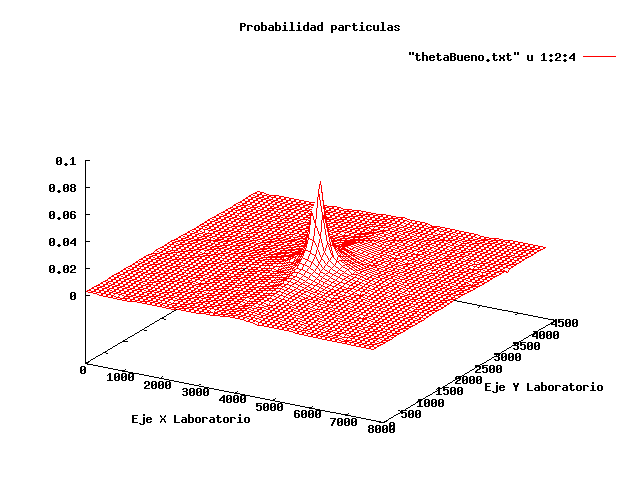

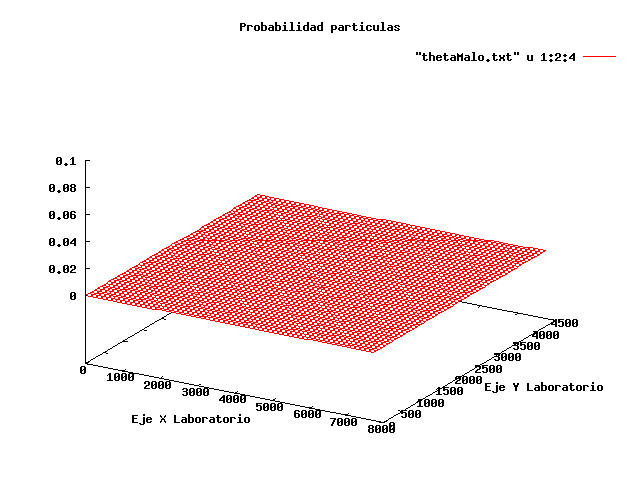

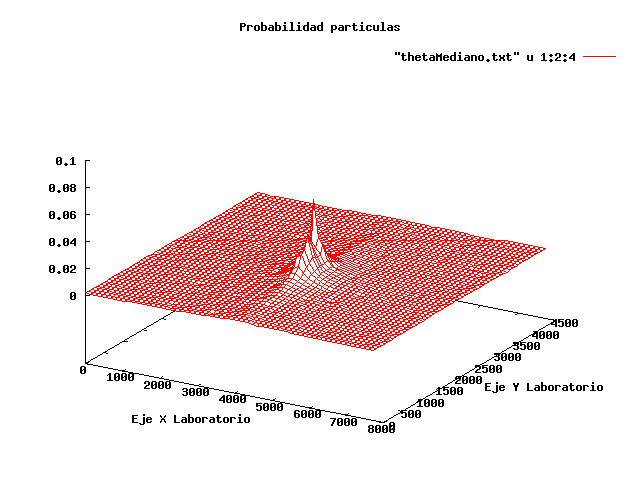

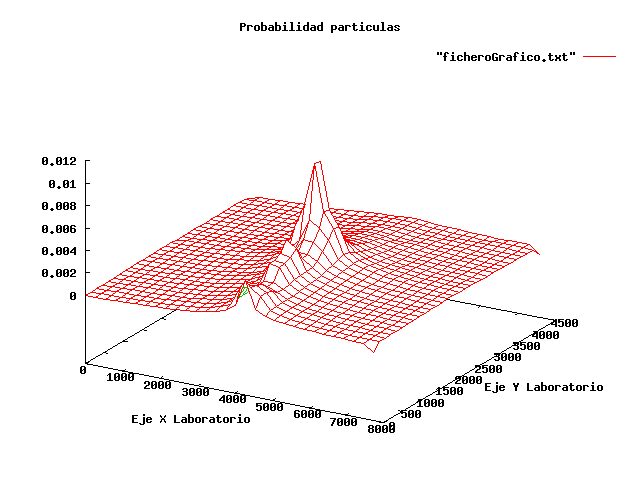

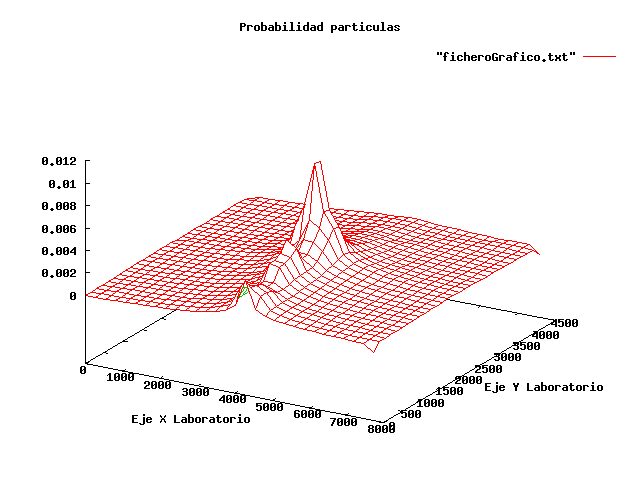

2008.10.03. Localization using MonteCarlo Method

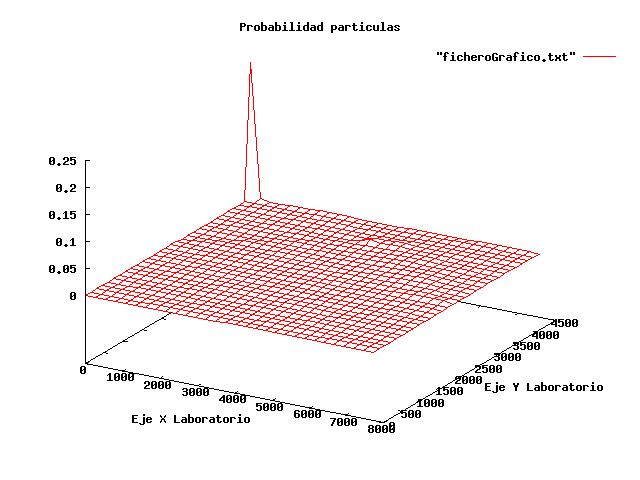

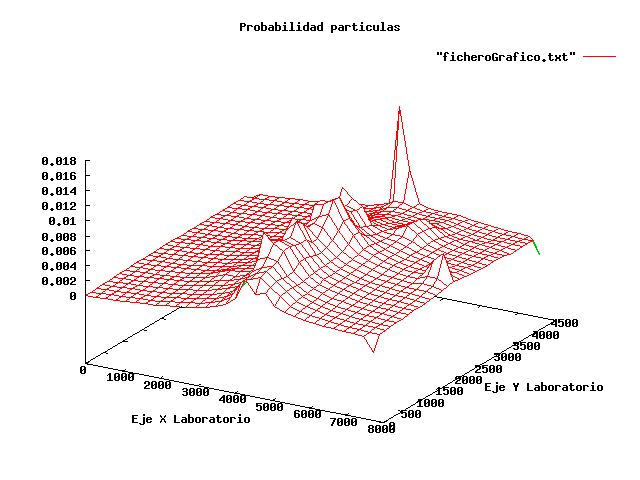

2008.09.20. Localization experiments stats

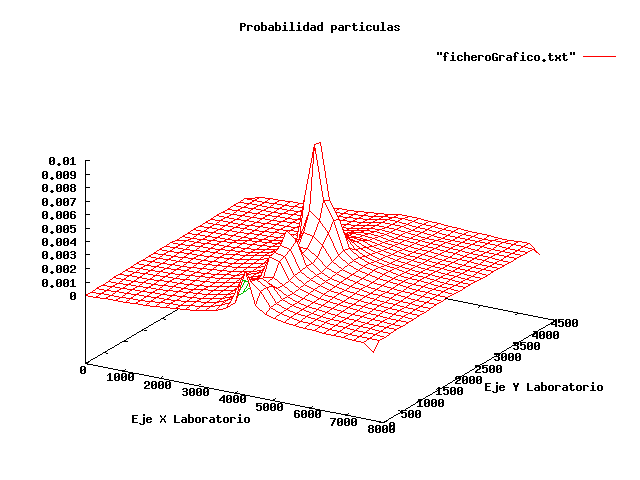

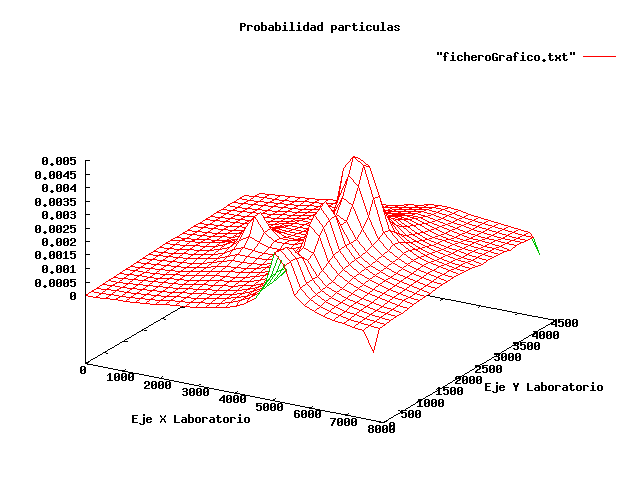

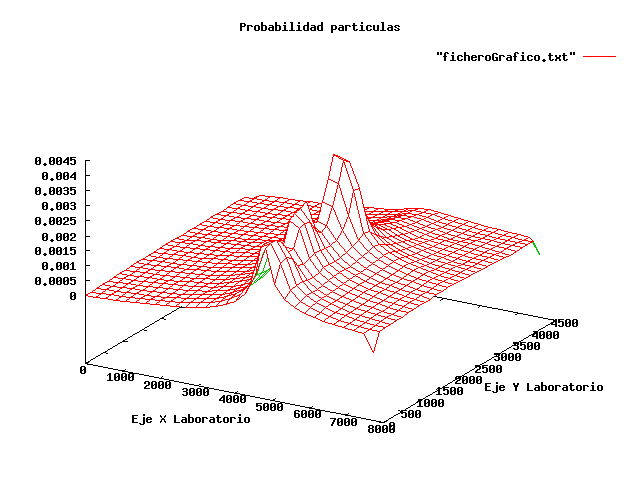

2008.09.20. Small noise using Montecarlo localization

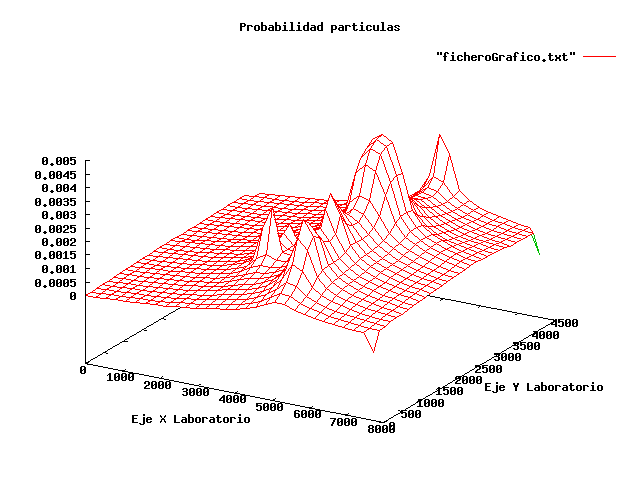

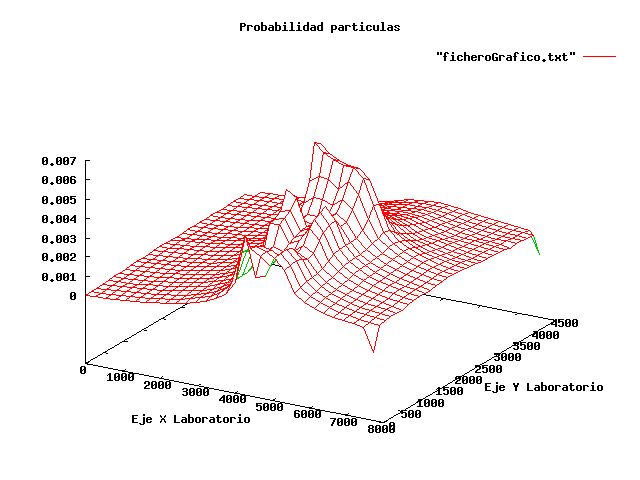

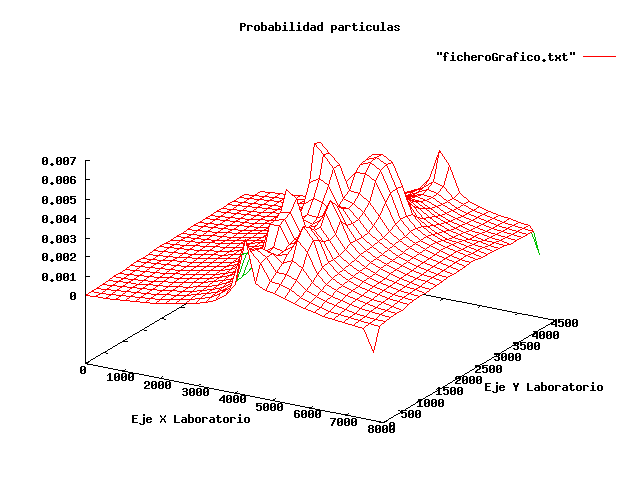

2008.09.16. Big noise using Montecarlo localization

2008.08.29. Robot Pioneer environments

2008.06.25. Films projection simulator

2008.06.15. Grant holder by ME Collaboration Project: Computer Vision

During this course I developed a project in which I tried to simulate a laser sensor with an ordinary webcam. This method is usually called 'visualsonar'. With it, the robot is now able to detect the obstacles around it. The method works as follows:

- First, the floor colour has to be separated from the coloured objects.

- Then, we can obtain an image made up only of borders.

- The only border of interest is the bottom one, which marks the frontier between the floor and the obstacles.

- Using computational geometry algorithms, we can estimate the distances between the robot position and the obstacles.

All the work is described in detail in this final technical report.
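The steps above can be sketched roughly in Python. This is only a minimal illustration, not the code from the report: it assumes the floor has already been segmented into a boolean mask, and it assumes a pinhole camera at a known height looking at a flat floor. The names `bottom_border`, `ground_distance`, `focal_px`, `cam_height` and `tilt_rad` are made up for this example.

```python
import math

def bottom_border(floor_mask):
    """For each image column, return the row of the lowest non-floor pixel
    (scanning from the bottom row upwards), or None if the column is all floor.
    floor_mask[r][c] is True where the pixel was classified as floor."""
    rows, cols = len(floor_mask), len(floor_mask[0])
    border = []
    for c in range(cols):
        hit = None
        for r in range(rows - 1, -1, -1):  # bottom row first
            if not floor_mask[r][c]:
                hit = r
                break
        border.append(hit)
    return border

def ground_distance(row, image_height, focal_px, cam_height, tilt_rad):
    """Distance along the floor from the camera to the floor point seen at `row`.
    Assumed model: pinhole camera, cam_height above a flat floor,
    optical axis pitched down by tilt_rad, focal length given in pixels."""
    cy = (image_height - 1) / 2.0
    # Rows below the image centre look further down than the optical axis.
    angle_below_horizon = tilt_rad + math.atan2(row - cy, focal_px)
    if angle_below_horizon <= 0:
        return math.inf  # ray at or above the horizon never reaches the floor
    return cam_height / math.tan(angle_below_horizon)

# Tiny hand-made mask: True = floor, False = obstacle or background.
mask = [
    [False, False, True],
    [False, True,  True],
    [True,  True,  True],
    [True,  True,  True],
]
border_rows = bottom_border(mask)
```

Each entry of `border_rows` plays the role of one "laser" reading: passing the row to `ground_distance` gives an estimated range for that column, and columns with `None` have no obstacle in view.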

2008.06.07. [Bonus track] Aibos teleoperated with the Wiimote. The Final Cup

2008.05.25. VFF local navigation using the real Pioneer

[Bonus track] The making of...

2008.05.20. GPP global navigation using the real Pioneer

[Bonus track] The making of...

2008.04.30. Pioneer robot avoiding walls

2008.04.28. Global GPP simulations: Player/Stage VS. OpenGL

2008.03.17. Fluids simulation

2008.03.13. Testing CUDA library

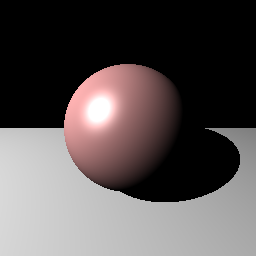

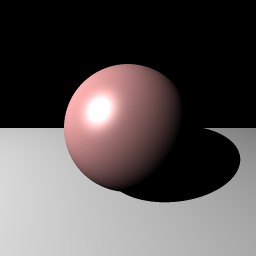

2008.03.01. Modeling raytracing

What's raytracing? It's a method that lets you create photorealistic images on a computer.

It attempts to trace the paths of light that contribute to each pixel of the image of a scene. Instead of only computing which surfaces are visible, it determines the intensity contributions of the light reaching them. Furthermore, it computes global illumination.

In our example, the first image shows a single ball illuminated by a single light. Here we've calculated only local illumination and shadows. The second image is the same example, but taking several samples per pixel, so the result is a clearer image.
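As a rough idea of what this involves, here is a minimal Python sketch of one ball, one light and several samples per pixel. It is not the program that produced the images: the scene constants and function names are made up, only Lambert diffuse shading is used as the local illumination model, and the shadow-ray test is left out.

```python
import math
import random

# Hypothetical scene: camera at the origin looking down -z.
CENTER, RADIUS = (0.0, 0.0, -3.0), 1.0
LIGHT = (2.0, 2.0, 0.0)

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Smallest positive t where origin + t*direction meets the sphere,
    or None on a miss. `direction` must be unit length."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def shade(origin, direction, light=LIGHT):
    """Lambert diffuse intensity in [0, 1] for one ray; 0 if the ball is missed."""
    t = hit_sphere(origin, direction, CENTER, RADIUS)
    if t is None:
        return 0.0
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, CENTER))
    to_light = normalize(sub(light, point))
    return max(0.0, dot(normal, to_light))

def render_pixel(px, py, width, height, samples, rng):
    """Average `samples` rays through pixel (px, py).
    One sample uses the pixel centre; more samples are jittered inside the pixel."""
    total = 0.0
    for _ in range(samples):
        jx, jy = (0.5, 0.5) if samples == 1 else (rng.random(), rng.random())
        x = (px + jx) / width * 2.0 - 1.0
        y = 1.0 - (py + jy) / height * 2.0
        total += shade((0.0, 0.0, 0.0), normalize((x, y, -1.0)))
    return total / samples

rng = random.Random(0)
single = render_pixel(2, 2, 5, 5, 1, rng)    # one sample per pixel
smooth = render_pixel(2, 2, 5, 5, 16, rng)   # several samples per pixel
```

Averaging several jittered rays softens the jagged edges of the ball, which is the difference between the first and the second image.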

Now we've got two balls and different lights, so we have to calculate global illumination and other natural effects like reflection or refraction. Again, the second image is the same example, but taking several samples per pixel, so the result is a clearer image.
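For reflection and refraction, a raytracer spawns secondary rays at each hit point. The directions of those rays can be sketched as below, using the standard mirror formula and Snell's law. The function names and the `eta` parameter (ratio of refractive indices, incoming medium over outgoing medium) are choices made for this example, not taken from the original program.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def reflect(d, n):
    """Mirror the unit direction d about the unit normal n: r = d - 2 (d.n) n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))

def refract(d, n, eta):
    """Refracted direction from Snell's law.
    d: unit incident direction, n: unit normal pointing against d,
    eta: n1 / n2. Returns None on total internal reflection."""
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))

# A ray coming down at 45 degrees onto a horizontal surface.
incoming = normalize((1.0, -1.0, 0.0))
up = (0.0, 1.0, 0.0)
mirrored = reflect(incoming, up)
into_glass = refract(incoming, up, 1.0 / 1.5)  # air to glass, assumed indices
```

Each secondary ray is then traced like a primary one, and its colour is blended into the pixel, which is how one ball ends up showing the other.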

2008.02.09. GPP behavior with obstacles

2008.02.09. GPP behavior on doors

Entrance

Exit

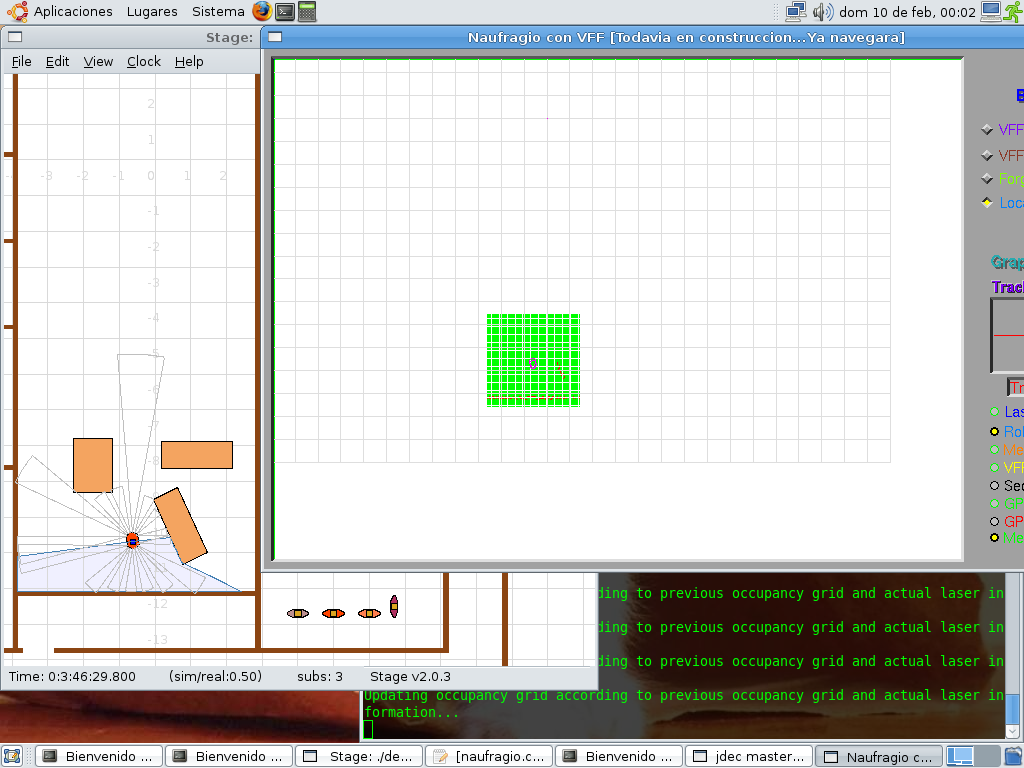

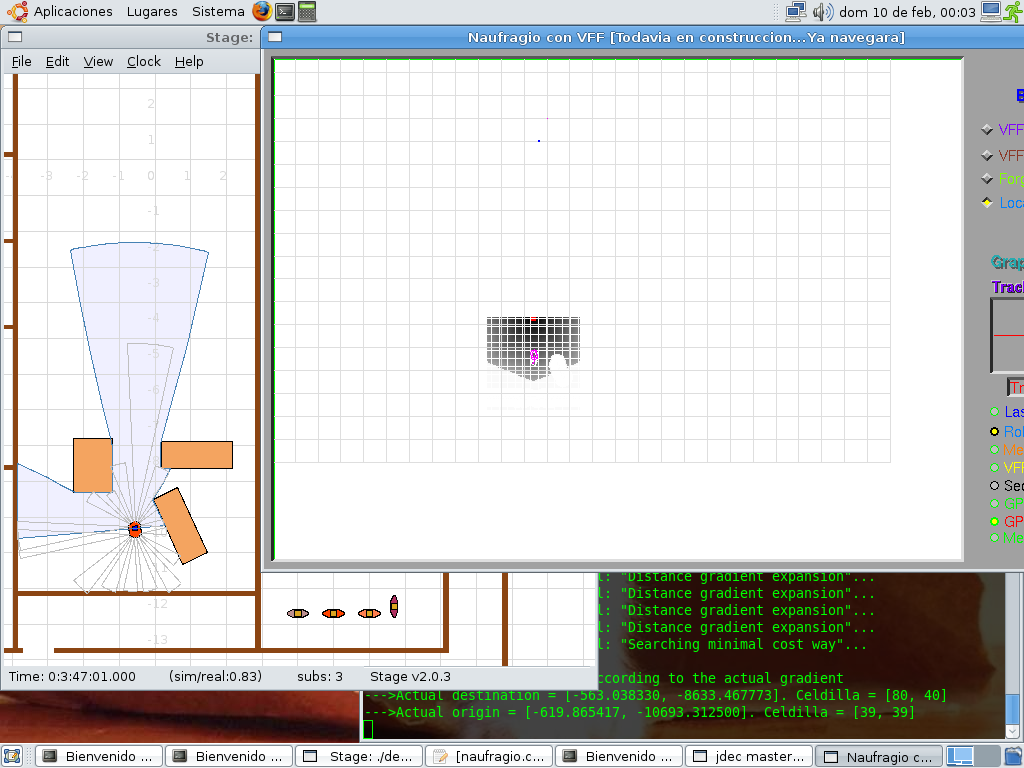

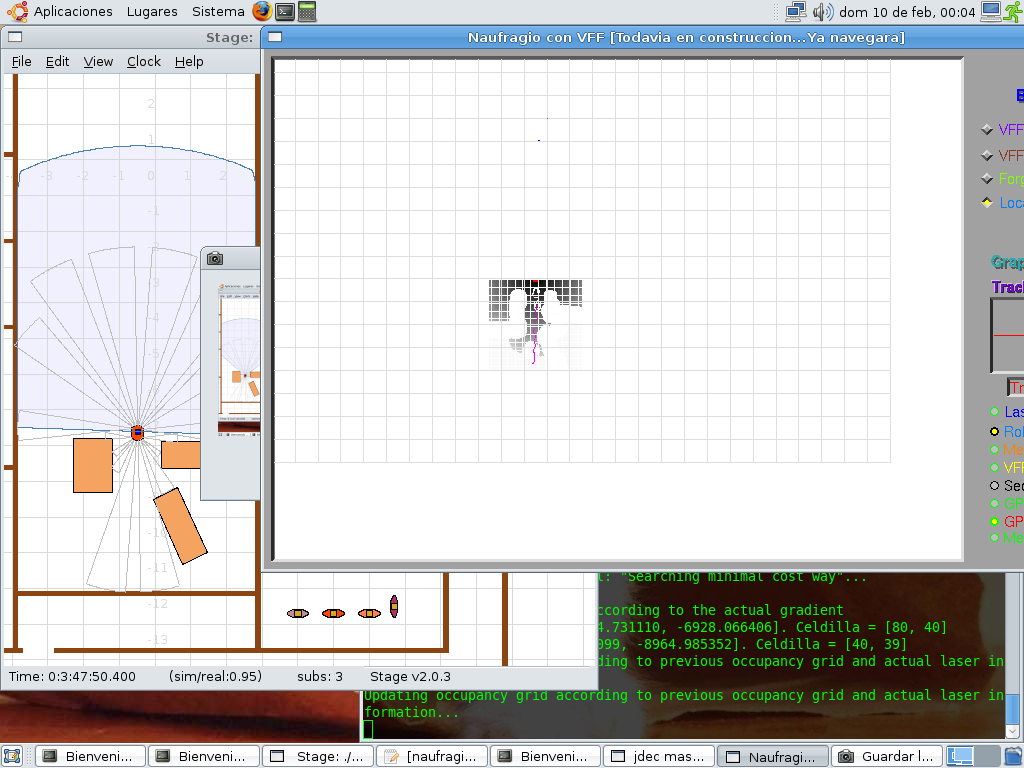

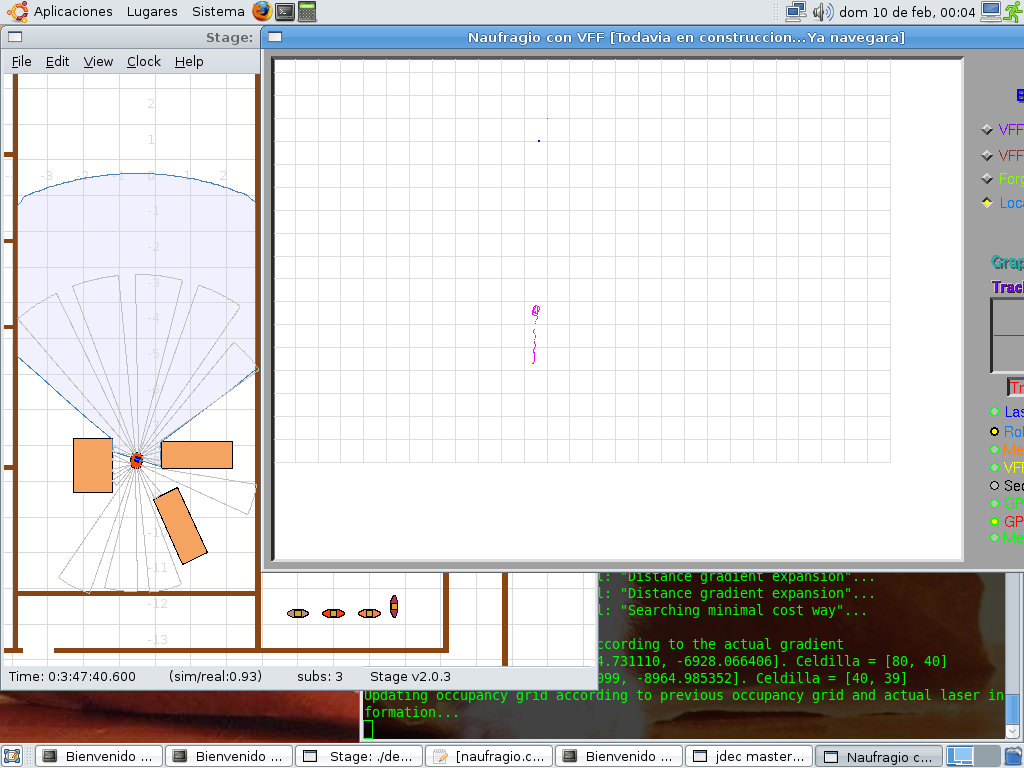

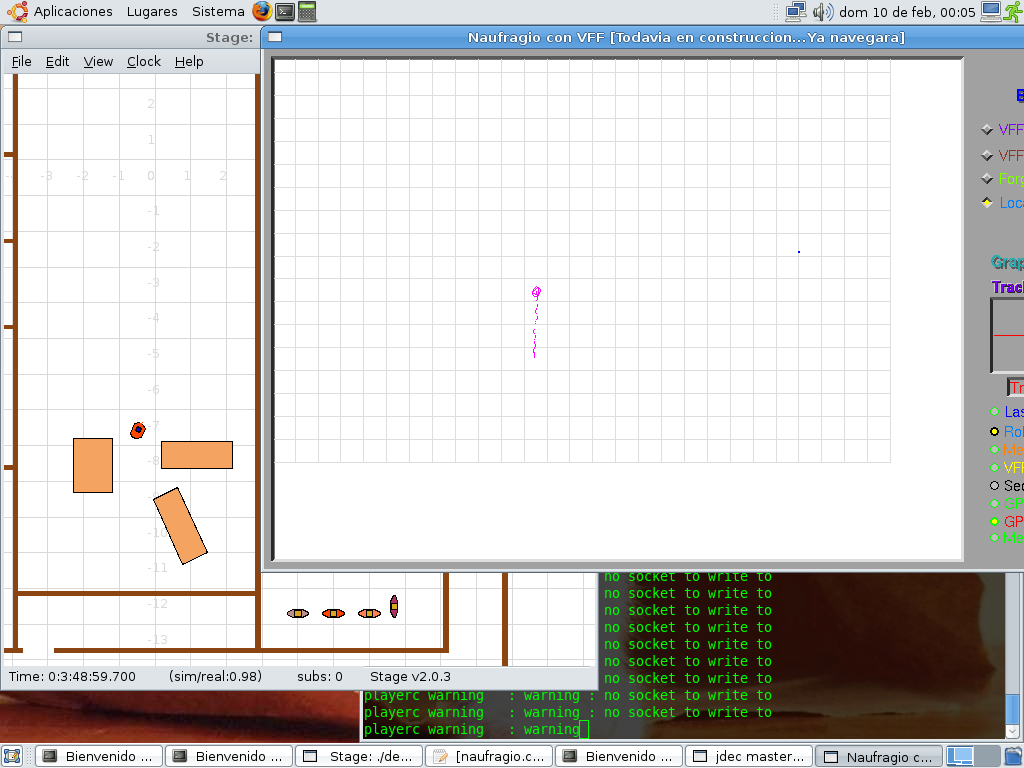

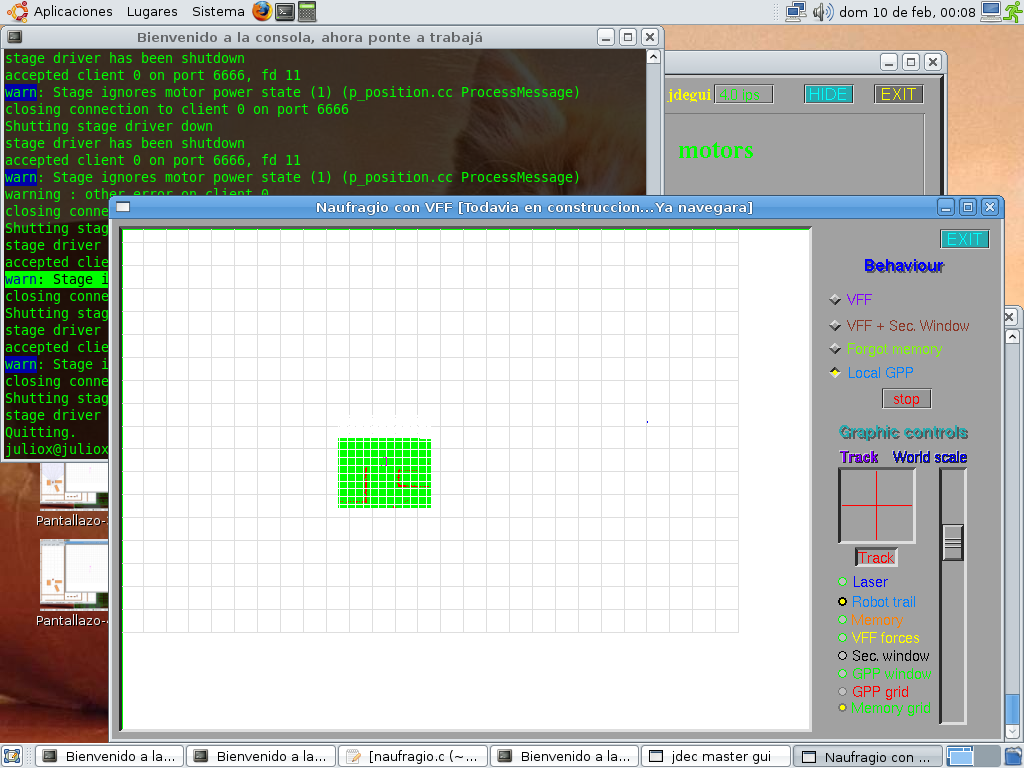

2008.02.04. Occupancy grid behavior

2008.02.04. GPP global navigation with obstacles

Obstacles. Experiment 1

Obstacles. Experiment 2

Laser VS. grid

Not forgetting

![Julio Vega's home page](https://gsyc.urjc.es/jmvega/figs/cabecera.jpg)